How AI Changes How We Work

Why clarity of thought becomes the new bottleneck

We are on the precipice of a fundamental disruption to work, and I’m sure you know why. AI has been the hottest buzzword of the past two years, and rightfully so. It is being woven into the fabric of our products, our communications, and our lives.

But at work, it still seems to be business as usual, with a looming fear that AI will replace many jobs.

You have probably heard that AI will take a ton of office jobs. Some companies, like Shopify, are going AI-first: before a team can hire a new employee, it must first justify why AI cannot do that role.

It is a very interesting time to work in technology, but more broadly, it’s an interesting time to be an office worker.

AI in its current form is a tool for offloading work. Many people are using it to supercharge their workflows, tackle tedious work, or even vibe code a prototype for their new app. While many claim to be AI experts, the reality is that everyone is still experimenting to see how and where AI can improve their work and lives, and few are doing it effectively. A recent MIT study found that 95% of AI pilots within companies are unsuccessful, which is not particularly surprising, considering knowledge work is much more complicated than we often give it credit for.

Despite these negative headlines, I personally believe that AI will fundamentally change the way we work. But for that to happen, we first need to understand how AI can be leveraged as an effective tool.

Before we begin

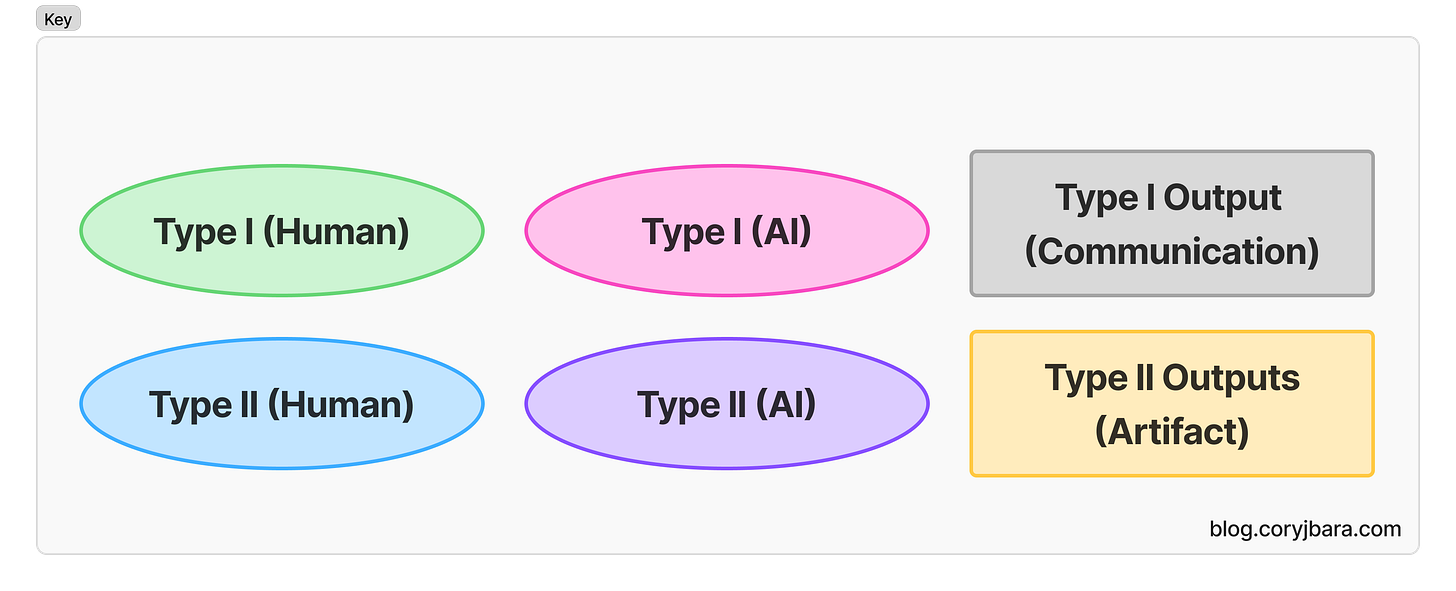

If you haven’t yet read my article about Type I and Type II work, I recommend doing so, as it lays out the framework for “work” that I reference heavily in this article. I will be using a number of diagrams to illustrate how I am currently thinking about work. They will make more sense once you understand the color-coding, so the image above is the key to all of the diagrams. Now, onto the article.

Understanding and Breaking Down Work

In my mind, the two issues that most of these failed pilot programs run into are understanding and breaking down the work, and overcoming the AI context burden.

When thinking about work, there’s always been a gap in my mind between “deciding what work to do” and actually “doing it”. I outlined these distinctions more deeply in my article Type I and Type II work. In summary, there are two types of work:

Type I → Strategy, decisions, direction, heading. This is the phase where you have a blank canvas, and you have to make the decision of what to paint.

Type II → Doing, executing, implementing. In other words: actually painting.

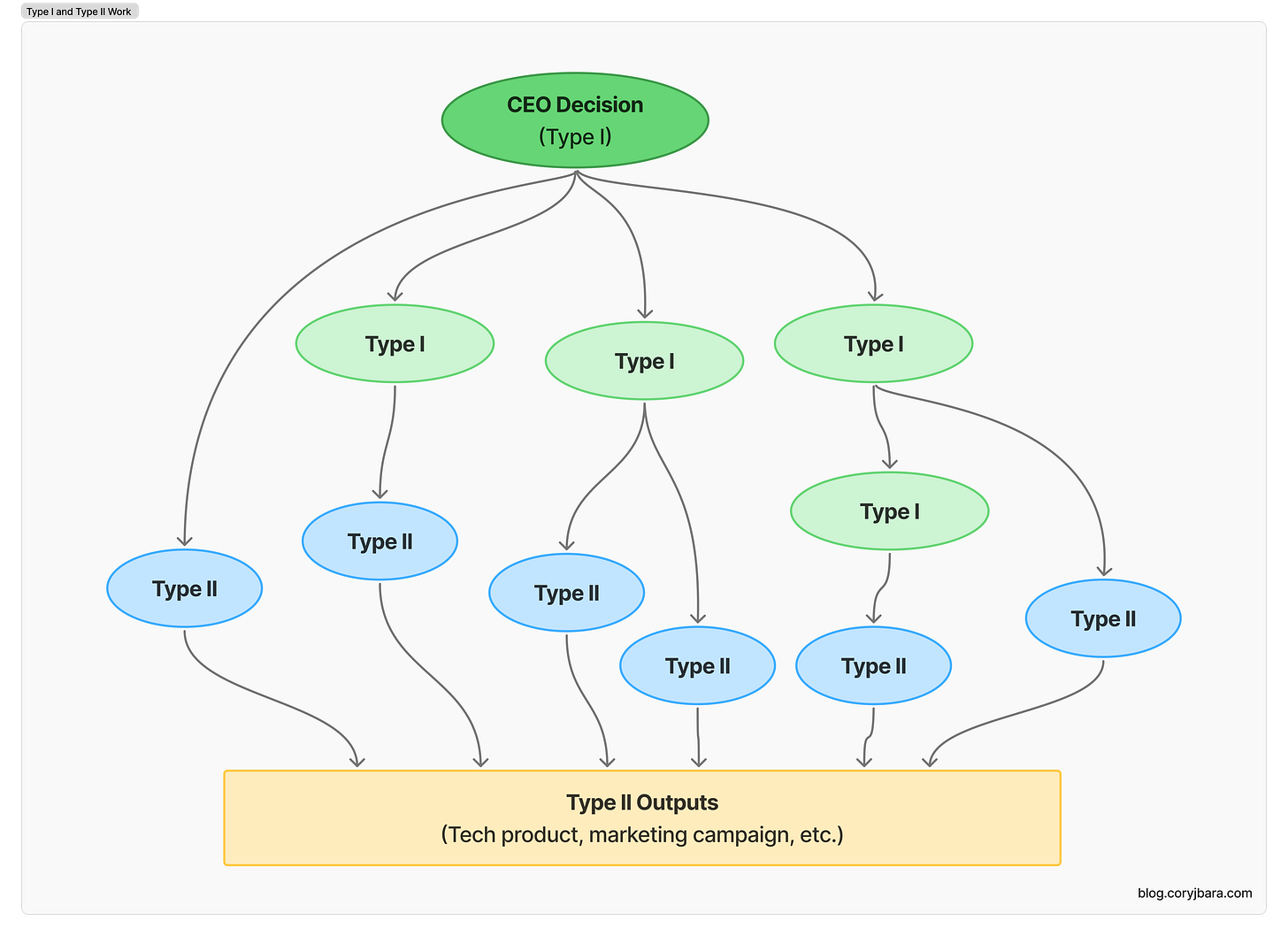

When you look at a corporate strategy or decision, the work it produces often looks like this:

A corporate structure exists because there is a gap between the time it takes to do Type I work and Type II work. Leaders decide what goals they want the company to accomplish, the employees figure out what work needs to be done to accomplish them, and then someone does the actual work. The Type II outputs are often produced weeks or months after the original decision is made.

Each of the bubbles (nodes) is a unit of work, and each of the arrows is some sort of communication between employees. The more branches in the tree, the more concurrent streams of work. And the more nodes and arrows per branch, the more dependencies within that work stream.

In other words: the bigger the tree, the more complex the communication.

While my tree above is a relatively simple one, I could imagine a CEO decision like “Google transitioning from a search company to an AI company” would entail thousands of branches, each with hundreds of nodes.

It takes time to go from idea → execution. The more ambitious the project, the more ambiguous the requirements, and the more people involved, the more complex the network of “work” gets. And every added bit of complexity means more work to be done.

In the past, the only way to do more work or take on more ambitious projects was to hire more people. But as I have been alluding to, recent advances in AI have created a paradigm shift in how work works. In order to understand how this shift affects how we all work, we need to first understand how “AI work” works.

The Gradual Path to AI Integration

I have heard people say that AI agents are going to take over all office work. In other words, they think that someone at a company will decide to do something and then spin off a number of AI agents to do the work, like so:

Not only do I think this is unlikely, but I think it requires a fundamentally different approach to understand what work is. Sure, people are currently able to offload individual tasks to AI, but it is a highly nuanced and context-dependent process (more on that later).

While it may be possible to eventually have an AI-only company, it requires a lot of work to understand the business to such a degree that you can clearly articulate each node of work. Going from a high-level Type I goal to the low-level Type II outputs that accomplish it is hard. And it is nearly impossible to map out all of that work, let alone articulate it clearly.

Company leadership is hard. Even with a small group of employees, it’s hard to understand exactly what you should be doing in your business. And if it’s hard enough to articulate the company strategy and execution plan to employees, it’s even harder to explain it to an army of AI agents.

This is why I don’t think we will go directly from a traditional org structure to the above AI-only structure. Instead of a wholesale shift, the transition from no-AI to AI-only will be a step-by-step, gradual process. We will be automating one node of work at a time.

AI will start from the bottom of the work tree and work its way up. In other words, AI will start from the bottom nodes of Type II work, and slowly work its way up to doing Type I work.

Teams will evolve organically from no AI to AI-enabled to AI-dependent. Perhaps someday we will get to AI-only, but I think the more realistic end state is a hybrid model in which AI is integrated seamlessly into the work tree: decisions (Type I work) are still made by people, and AI fills in the gaps in execution (Type II work).

It’s easy to say this at a high level, but a more helpful exercise may be to visualize the AI transition with an example.

Example: How AI Will Be Integrated

One big piece of a VC analyst’s job is to find and validate companies for investment. I would classify that as Type II work, because there is a tangible artifact at the end of the process (a validated list of companies to fund). This feels like a tedious and possibly automatable task, ripe for AI to take on.

Although one person does this task within a VC fund, it is not a single unit of work in my mind. I would argue that it is two fundamentally different units of Type II work: the first is finding companies, and the second is validating those companies to ensure they fit within the VC’s investment thesis. Each task is independent, and each can produce its own artifact.

Both are challenging pieces of work, and arguably, AI could do either task. However, we don’t have to have AI do both. Splitting the “work” into discrete units of output allows either task to be offloaded to AI on its own. Some would call this an “AI-automated workflow” or an “agentic workflow” (both are hot buzzwords right now), but it’s really just offloading tedious Type II work to AI.

The question is, to what extent should AI automate this work? I’ve included some options below:

I would argue the ideal option is probably the middle option. AI would do the first task of finding companies, because AI is great at quickly sifting through massive amounts of data, exactly the kind of skill that is useful for compiling a list of companies. However, I am not sure AI will be able to validate companies based on the fund’s investment thesis, so we still need the analyst to do the list validation.

This leads to another question with AI work: do you trust the Type II output? In other words, to what extent do you trust AI to do the work correctly?

Even if AI were able to find and validate the list of companies itself without an analyst, I am not sure any right-minded VC would blindly invest in a company that an AI agent came up with. Certainly not without doing their own research and due diligence.

Perhaps there is a way to have AI do the initial sweep of validation: enriching each company in the list with notes, tags, summaries, and creating an initial ranking of whether or not it thinks the VC fund should invest. Then the analyst still goes through and does additional validation and sorting. But even so, AI is just used to limit the search space, and the decision-making process still lies in the hands of people.

The goal of using AI is not to offload work, but to get a specific Type II output. Not only do you have to delegate the work to AI, but you also must validate that the output of the AI meets the criteria for the goal you set. It’s not enough to just offload the work, you have to offload the work well.

In theory, delegating to AI should be the same as delegating to a person. Effective delegation includes describing both the desired outcome (the Type II artifact) as well as the context from up the chain of work (the Type I output). But often in this delegation process, we overlook the context we naturally have as humans. A person has both the explicit context from the Type I output, as well as the implicit context from being an employee at a company. The burden we have in delegating to AI is that we must make the implicit context explicit.

The AI Context Burden

AI is great at doing Type II work, because there is very little ambiguity, and a very clear artifact to produce. The problem with ambiguity is not that AI can’t figure it out, but rather that it doesn’t have enough context.

Granted, a person probably couldn’t do the work without additional context either. Often we take our own context for granted. What context, exactly?

When we join a company, we are onboarded. We are introduced to the team. We meet the executives. We get a sense of why the company exists, we learn the mission statement, we internalize what we are doing there. We have purpose to our work.

There are subtle cues that allow us to understand our place within the company, and our role within the team. We learn about the company’s complex strategic goals, and the milestones they have set out for themselves. We begin to understand how work is done, the processes that have been put in place, and how we can effectively assimilate into the culture.

We bring general knowledge from past jobs, and specific knowledge from the industry we are joining. We begin to understand how the pieces of a company work together. And over time, we gain more and more context, until we are comfortable enough to add to the shared knowledge within the company. We begin doing work, accomplishing goals, producing outputs, all the while learning. Every time we do work, we gain more context, and we retain it so we can leverage it the next time.

On the flip side, AI currently exists in the context of a single chat. Very little context is retained across chats, so it’s like starting from scratch every time you open a new Claude or ChatGPT window. While some systems, like Claude integrations, can proactively fetch your company documents to find the context they need to do a task effectively[1], for the most part AI has no context beyond what you explicitly give it in that chat session. Even so, I still see people lazily prompting AI to “write me an email to a client” and being dissatisfied with the generic result.

It’s no wonder to me that 95% of AI pilots within companies fail, or that AI is nowhere near fully replacing employees. We aren’t giving the AI enough context to succeed. It’s like hiring someone without telling them about the company, giving them no onboarding, not introducing them to the team, and then telling them to accomplish a task. Good managers know that this will not lead to good results, and yet we keep prompting generically, expecting a supercharged oracle to solve problems we have not yet solved ourselves.

The rule of thumb I give for prompting is: generic in → generic out.

The biggest challenge is not just using AI, but giving it enough context to do the work successfully. And in order to get value out of AI, you have to brief it as you would an employee.

Briefing AI like an Employee

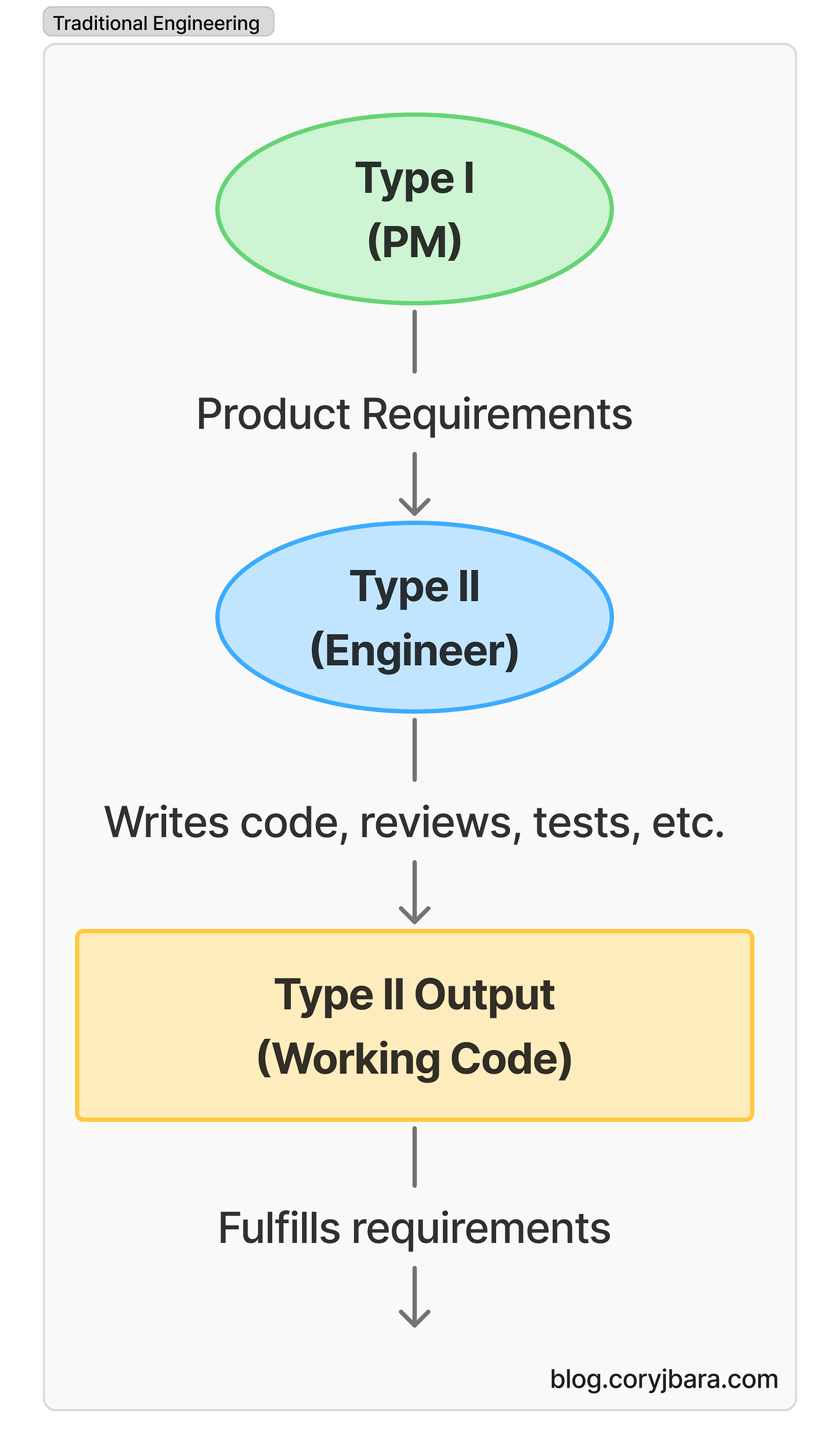

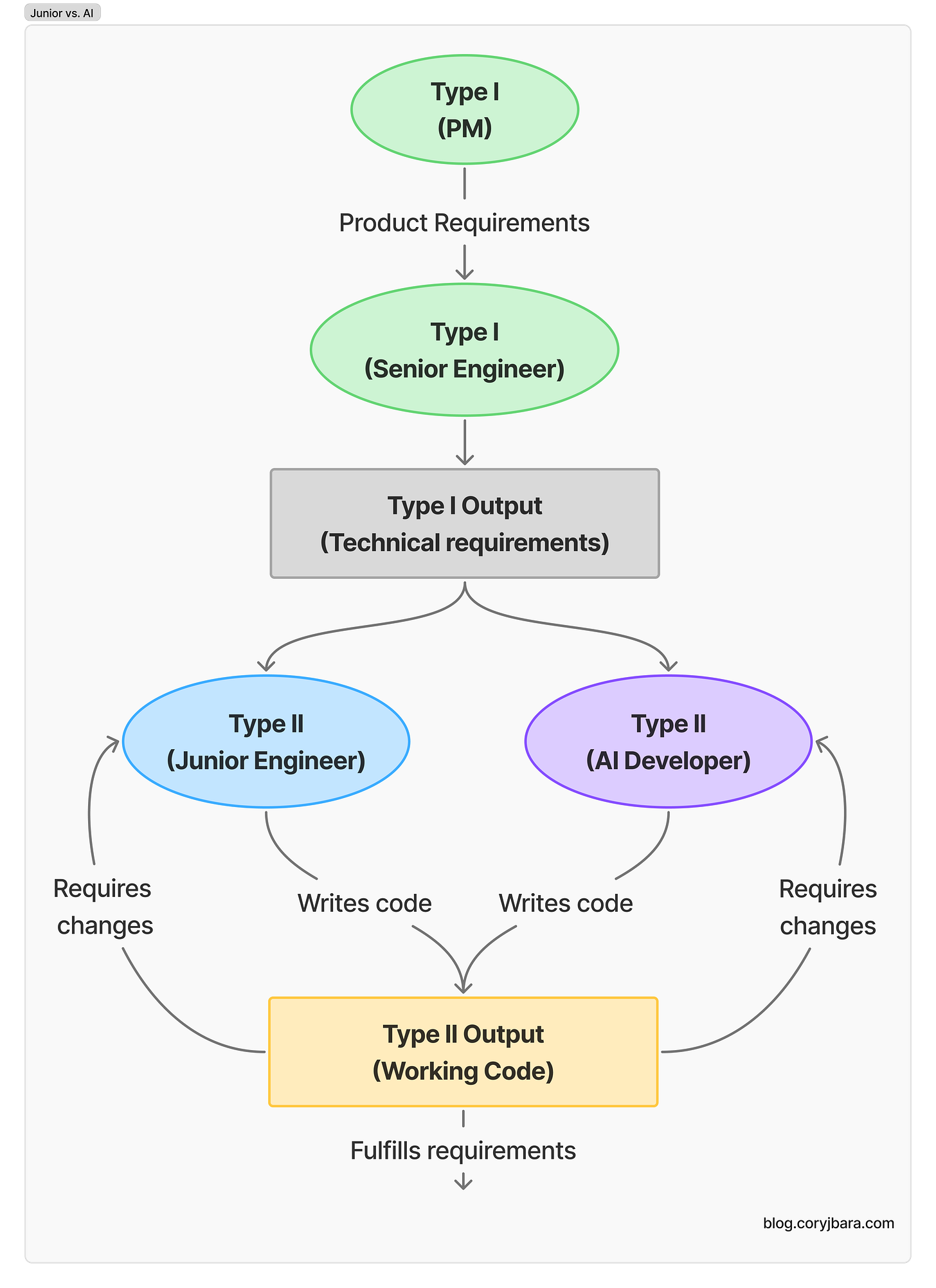

Let’s take the example of an engineering team building a new product. In the pre-AI world, the workflow may look something like this:

A product manager decides what to build and communicates those requirements to a senior engineer, who understands how to build it. At the end, there is a Type II output (a working feature).

Type I work is all about translating requirements, making decisions, adding an additional bit of context, and converting it into more understandable requirements downstream. Sometimes this output is written communication, sometimes it is verbal, and sometimes it all happens in your head.

Product requirements are a Type I output, because they communicate the desired end state. A good engineer takes these Type I product requirements, translates them into the technical specifications (an additional Type I output), and then builds the product. They do the implicit Type I work in their head of translating the product requirements to technical requirements, and then they do the Type II work of actually writing the code and building the product.

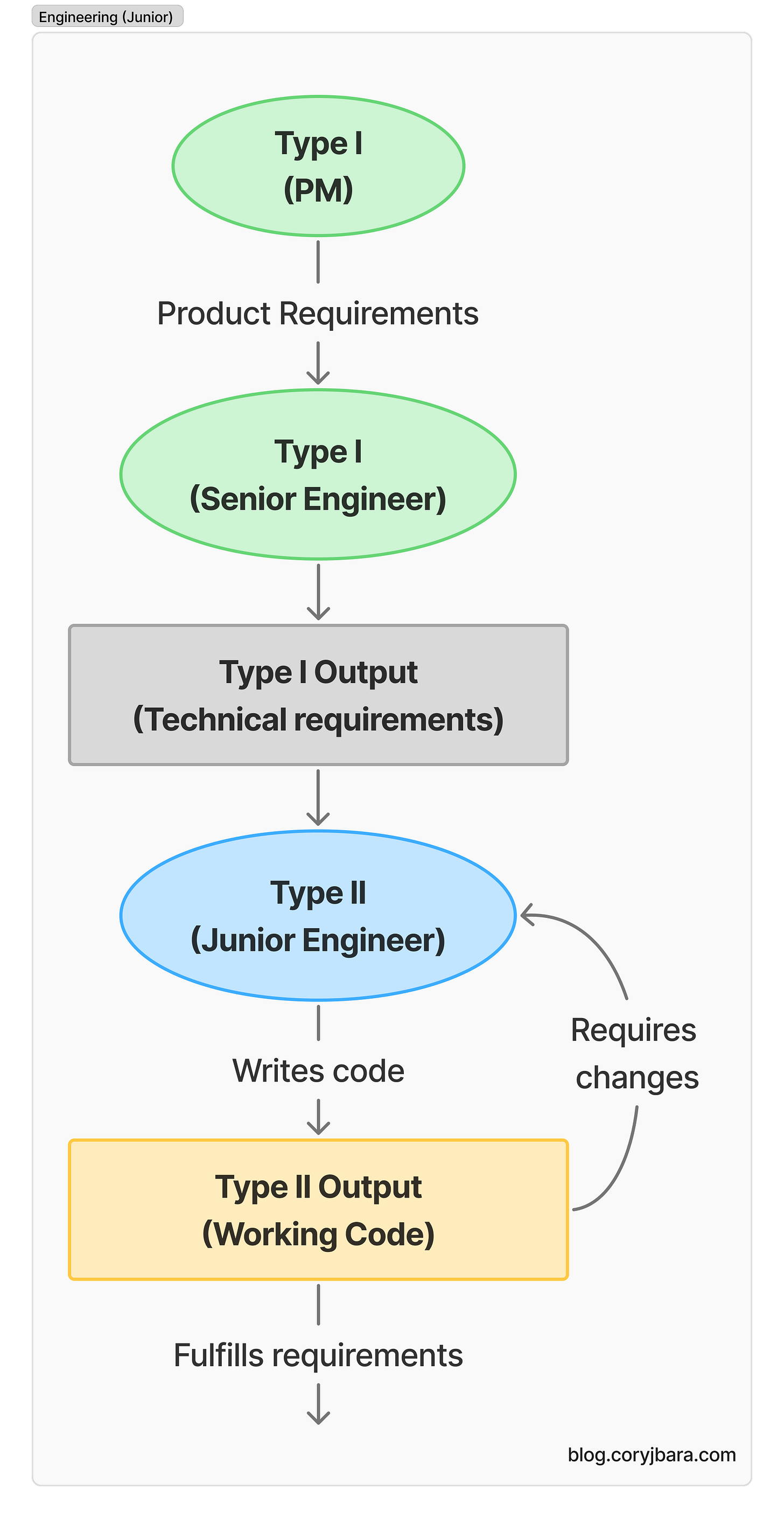

But let’s say we have a junior engineer on the team, one who understands the concepts, but does not have the experience to properly translate the product requirements into a real feature.

In this case, we need some explicit Type I engineering work. In other words, we need someone to translate the product requirements into technical requirements. And who better than a senior engineer to do this Type I work:

Ideally, in a mentor-heavy culture, you would have the senior engineer teach the junior engineer how to translate these requirements themselves: how to think about the flow of information, how to model the data in the database, what APIs are necessary, how the system architecture works, etc. In other words, teach a man to fish[2].

The junior then takes both pieces of Type I output (product and technical requirements) and does the Type II work of coding. While there may be some changes required after they write their code, after a few rounds of revisions, the code fulfills all requirements, and everyone moves on to the next project.

This has been the way of engineering teams for years.

However, with the introduction of AI tools such as Cursor or Claude Code, there is a paradigm shift in how development teams work and are structured. Instead of delegating tasks to other engineers, engineers will increasingly delegate them to highly skilled AI developers. So the flow could look like either of these branches:

I see both of these paths as viable ways of building software. AI developers are comparable to highly skilled junior developers. AI has vast general knowledge but no company context, while a junior engineer has little general knowledge but a good amount of company context.

But while the junior engineer path may take a week to get to the Type II output, the AI developer path is near-instantaneous. Additionally, companies have to choose between paying ~$100,000 (or more) to hire a junior developer, or paying $20/person/month for an AI development tool. I imagine most tech companies would salivate at the more knowledgeable, cheaper, faster path.

The downside is that AI does not care about your product, AI cannot understand your company culture and mission, and AI will not always learn and grow in the same way a junior engineer can. So while you are leveraging it for its output, AI will rarely come up with good new ideas in the same way a good employee will.

But the economic reality is that most companies will opt for the supercharged senior engineer using AI tools over investing time, money, and resources in a junior developer’s growth.

Delegation and Agentic Coordination

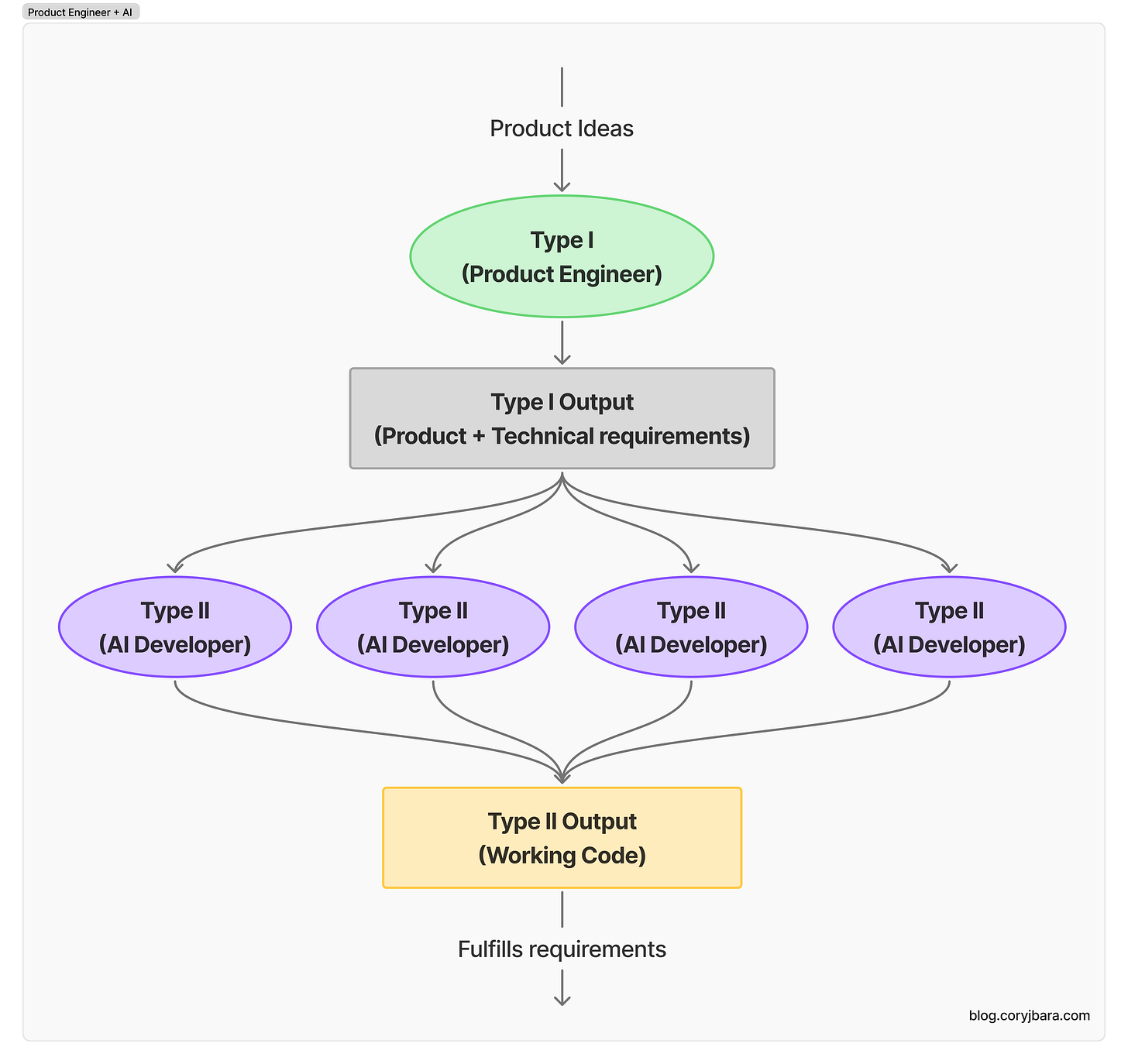

As companies add more AI developers to their team, the AI-delegation does not end at a single branch. Over time, top-tier senior engineers will continue to take on more and more tasks, offloading more and more of the coding to AI. The role of an engineer fundamentally shifts from someone who writes code to someone who coordinates the building of systems and products.

Eventually, highly skilled product engineers will easily be able to take high-level product ideas, do the Type I work of translating them into both product requirements and technical requirements, and then offload the task of coding to AI:

The limiting factor will no longer be the time it takes to write the code. AI writes code faster than you could imagine. The limiting factor will be the engineer’s clarity of thought: how long it takes to define and articulate the technical plan.

This type of agentic coordination is not easy and requires a thorough understanding of many domains, which is why I am skeptical of people who say they are building companies with no employees. Things simply do not move as fast as the hype says. That said, I do believe it is possible, and I believe some have already figured out how to coordinate an entire engineering department with one person.

The New Bottleneck

This example of the AI-enabled product engineer is one that is easy to understand, because it is happening right now. But over time, I see this type of situation happening for almost every office role. Our work as people will change rapidly, going from doing a lot of Type II work to doing much more Type I work, and delegating the rest to AI.

With AI taking on the tedious burden of the Type II work, for the first time ever, the bottleneck for a team will be decision-making and clarity of thought, not execution.

If you were given unlimited employees, what would you build? That’s the new question in the age of AI. It’s no longer a matter of getting the work done; it’s deciding what work to do in the first place. As our roles become more centered on coordination, communication, and making ideas concrete, those are exactly the skills that determine how much work we can successfully offload.

Effective communicators always had an advantage, but that advantage is amplified dramatically today due to AI.

In the short term, there’s a danger of lazily handing tasks off to AI without thinking about the relevant context and just hoping for a good result.

However, as we’ve seen in the examples above, to be fully empowered by AI, you must become an excellent communicator, and be an aggregator of context. With clarity of thought, and the context in hand, it is possible to leverage AI to achieve results at a pace that you would not have thought possible just a few years ago.

So if the bottleneck is now decision-making and clarity of thought rather than execution, what does this mean for team structure? If individual engineers can coordinate multiple AI developers, how small can effective teams actually become? And what advantages do small, AI-enabled teams have over traditional large teams?

Until next week,

Cory

[1] Other systems, like Cursor Rules, help you define additional context for the model so that it can be a more effective pair programmer. This context is fed into each request you make, so the AI is not starting blind in a given codebase. Otherwise, all the AI has to go on is the code it has seen before in other projects.

The reality is that adding context to an AI request is hard, because that context is scattered across all of our systems, even when it is perfectly clear inside our heads.

I expect we will soon see more “AI wrappers” that automatically supply context to the model. Apps of this type, such as Lovable, are already creating a lot of value precisely because this context burden is so hard to overcome.

[2] The process of mentorship is a lot of work for an engineering team, and often it would have been easier for the senior engineer to just do the work instead of delegating. They could do the Type I product → engineering translation work in their heads, and then write the code themselves.

There is value in mentorship long-term, as it will help more junior people advance and build better software, but it’s worth noting that if efficiency is the only goal, there may not be room for juniors on your team.

I think we are seeing this happen across the board, as companies are getting less patient about results being delivered (i.e. the Type II work being completed), and they cannot take the extra time to hire and train junior engineers. Where does this leave college new grads? I am not sure. Possibly a subject for a future post.

All I know is that I am forever grateful to the mentors who, early in my career, helped me get where I am today.
